API xR Calibration

This topic covers the basic steps of aligning the physical and virtual worlds within Designer using the API.

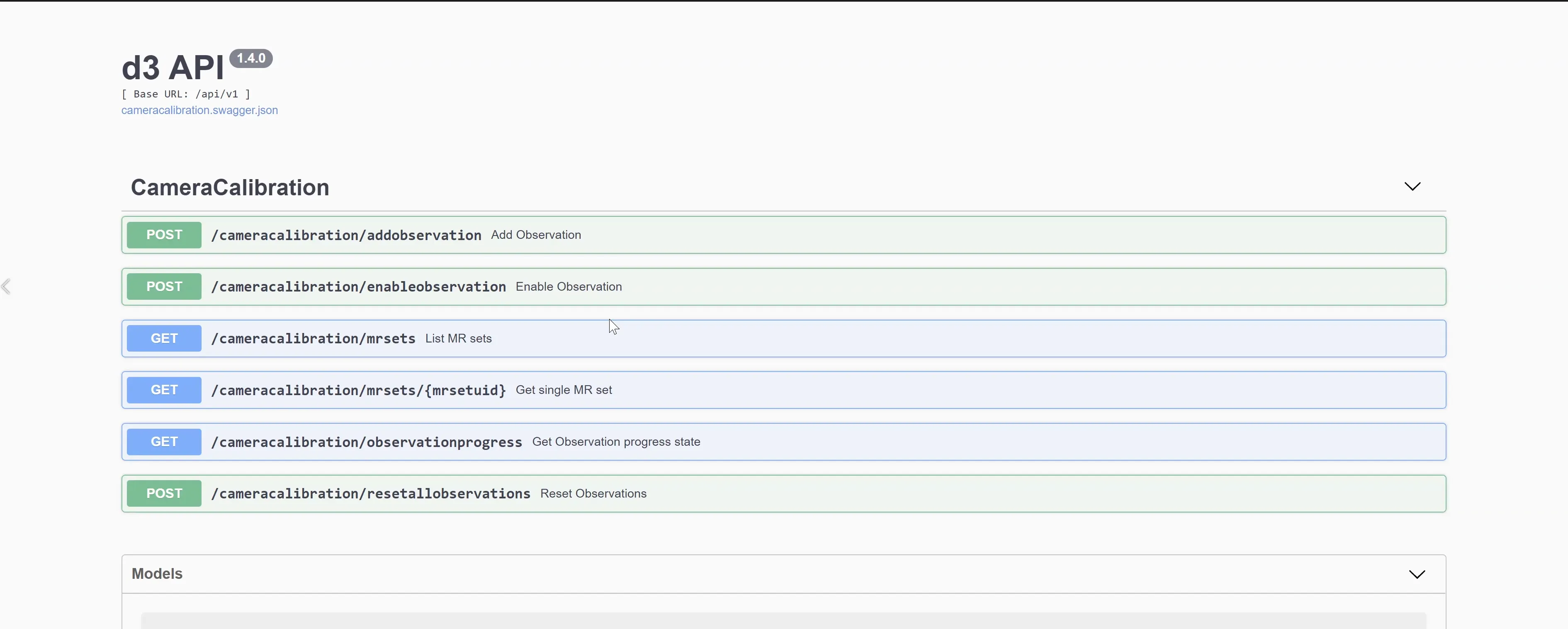

What is the API?

The API is an application programming interface within Designer, accessible via d3Net, that allows direct access to specific software functionality.

r20 introduced the ability to perform an xR spatial calibration directly from the API. This allows remote access to the functionality and reduces the number of people required to execute the process to one.

To access the API, navigate to the base URL:

http://localhost/docs/v1/index.html
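The base URL above points at the local machine. Because the API is reached over d3Net, a remote client would presumably replace `localhost` with the Designer machine's hostname or IP address. The Python sketch below assumes exactly that; the `docs_url` and `fetch_docs` helpers and the `version` parameter are hypothetical and are not part of the API.

```python
from urllib.request import urlopen


def docs_url(host: str = "localhost", version: str = "v1") -> str:
    """Build the API documentation URL for a given Designer machine.

    Assumption: only the host changes for remote access; the
    /docs/<version>/index.html path comes from the base URL above.
    """
    return f"http://{host}/docs/{version}/index.html"


def fetch_docs(host: str = "localhost", timeout: float = 5.0) -> str:
    """Download the documentation page as text (needs a reachable server)."""
    with urlopen(docs_url(host), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


if __name__ == "__main__":
    print(docs_url())                # http://localhost/docs/v1/index.html
    print(docs_url("192.168.0.10"))  # hypothetical remote Designer machine
```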

Workflow

A well-calibrated stage shows no seams or visual artifacts that break the blend between the real and virtual environments. With adequate preparation, a stage can be fully calibrated in under a few hours.

Prior to beginning spatial calibration, ensure that:

- A camera tracking system is set up and receiving reliable data

- The xR project has been configured with an MR set, accurate OBJ models of the LED screens, and tracked cameras with video inputs assigned.

- The cameras, LED processors, and all servers are receiving the same genlock signal.

- The feed outputs have been configured and confirmed working.

- The Delay Calibration has been completed.
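The prerequisites above can be tracked as a simple pre-flight checklist. This Python sketch is purely illustrative: it makes no calls to Designer or the API, and the `Prerequisite` class, the `outstanding` function and the example `done` flags are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Prerequisite:
    description: str
    done: bool = False


def outstanding(checks: list[Prerequisite]) -> list[str]:
    """Return the descriptions of prerequisites not yet satisfied."""
    return [c.description for c in checks if not c.done]


# The five conditions listed above; the done flags are example values only.
checklist = [
    Prerequisite("Camera tracking system set up and receiving reliable data", True),
    Prerequisite("MR set, accurate LED screen OBJ models, tracked cameras with video inputs", True),
    Prerequisite("Cameras, LED processors and servers share the same genlock", True),
    Prerequisite("Feed outputs configured and confirmed working", False),
    Prerequisite("Delay Calibration completed", False),
]

if __name__ == "__main__":
    missing = outstanding(checklist)
    if missing:
        print("Not ready for spatial calibration:")
        for item in missing:
            print(" -", item)
    else:
        print("Ready for spatial calibration.")
```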

For information on calibration tips & tricks, please visit the xR Hub of the community portal.

A calibration is a set of data stored inside an individual camera object. The calibration process uses the raw tracking data as a base and compares it against structured light patterns to determine where the real camera actually is in relation to that tracking data.

An observation is a set of images, captured by the camera, of white dots on a black background displayed on the stage (called structured light). The number of dots and their size and spacing are determined by the user.

- Observations serve as data points for the algorithm Disguise uses to align the tracked camera with the stage and to determine the lens characteristics.
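To illustrate how the user-chosen dot count, size and spacing interact, the Python sketch below renders a white-dot-on-black grid as a 0/1 bitmap. It is not Disguise's pattern generator; the `dot_grid` function and its `spacing` and `radius` parameters (in pixels) are assumptions made for this example.

```python
def dot_grid(width: int, height: int, spacing: int, radius: int) -> list[list[int]]:
    """Render white dots on black as rows of 0 (black) and 1 (white).

    spacing: distance in pixels between dot centres (hypothetical parameter).
    radius: dot radius in pixels (hypothetical parameter).
    """
    if spacing <= 0 or radius < 0:
        raise ValueError("spacing must be positive and radius non-negative")
    # Dot centres start half a spacing in from the edge so the grid is inset.
    centres = [
        (cx, cy)
        for cy in range(spacing // 2, height, spacing)
        for cx in range(spacing // 2, width, spacing)
    ]
    image = [[0] * width for _ in range(height)]
    for cx, cy in centres:
        # Only visit the bounding box of each dot, clipped to the image.
        for y in range(max(0, cy - radius), min(height, cy + radius + 1)):
            for x in range(max(0, cx - radius), min(width, cx + radius + 1)):
                if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                    image[y][x] = 1
    return image


if __name__ == "__main__":
    for row in dot_grid(16, 8, 8, 1):
        print("".join("#" if p else "." for p in row))
```

A smaller `spacing` yields more dots on the same stage; a larger `radius` makes each dot bigger and easier for the camera to resolve at a distance.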

There are two types of observations used in the process: Primary and Secondary (P and S).

- Primary observations are the positional or spatial observations used to align the real-world and virtual cameras. A minimum of five Primary observations is required for the solving method to compute, so aim for five good observations when you begin your calibration process. Primary or Secondary status is defined by the most common zoom and focus values in the pool of observations.

- Secondary observations comprise the zoom and focus data that create the lens intrinsics file. Each new zoom and focus position creates a new Lens Pose.
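The Primary/Secondary rule above can be expressed in a few lines: the most common zoom and focus pair in the pool marks the Primary observations, every other pair is Secondary, and solving needs at least five Primary observations. In this Python sketch the `Observation` class, its fields and the tie-breaking behaviour are assumptions, not the API's data model.

```python
from collections import Counter
from dataclasses import dataclass

MIN_PRIMARY = 5  # minimum number of Primary observations stated above


@dataclass(frozen=True)
class Observation:
    name: str
    zoom: float   # raw zoom value (hypothetical units)
    focus: float  # raw focus value (hypothetical units)


def classify(observations: list[Observation]) -> tuple[list[Observation], list[Observation]]:
    """Split observations into (primary, secondary).

    The most common (zoom, focus) pair defines Primary. On a tie, the pair
    seen first wins (an assumption; the source does not say).
    """
    if not observations:
        return [], []
    counts = Counter((o.zoom, o.focus) for o in observations)
    primary_key, _ = counts.most_common(1)[0]
    primary = [o for o in observations if (o.zoom, o.focus) == primary_key]
    secondary = [o for o in observations if (o.zoom, o.focus) != primary_key]
    return primary, secondary


def can_solve(observations: list[Observation]) -> bool:
    """True once there are enough Primary observations for the solve."""
    primary, _ = classify(observations)
    return len(primary) >= MIN_PRIMARY
```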

There is no need to assign a zero point in Designer. Apply minimal offsets and transforms to align the tracking data with the Disguise origin point before starting the Primary calibration.

Start by taking primary observations at a single locked zoom and focus level. This Primary Calibration will calibrate the offsets between the tracking system and Disguise’s coordinate system.
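As a rough picture of what an offset between the tracking system and Disguise's coordinate system means, the Python sketch below maps a tracked position into stage space with a rotation about the vertical axis plus a translation. The y-up convention, the yaw-only rotation and the `apply_offset` helper are simplifying assumptions; it shows how a solved offset could be applied, not how Disguise solves for it.

```python
import math


def apply_offset(
    tracked: tuple[float, float, float],
    yaw_deg: float,
    translation: tuple[float, float, float],
) -> tuple[float, float, float]:
    """Map a tracked position into stage coordinates.

    Assumes a y-up convention and models the offset as a rotation about the
    vertical (y) axis followed by a translation. A real calibration offset
    can also include pitch and roll; they are omitted here for brevity.
    """
    x, y, z = tracked
    a = math.radians(yaw_deg)
    # Rotate about the y axis.
    rx = x * math.cos(a) + z * math.sin(a)
    rz = -x * math.sin(a) + z * math.cos(a)
    tx, ty, tz = translation
    return (rx + tx, y + ty, rz + tz)
```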

- After each observation, the alignment should look good from the current camera position. If the alignment begins to fail, review all observations and remove suboptimal ones.

- The Secondary calibration calibrates the zoom and focus data, aligning the virtual content with the real camera's zoom and focus changes.
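Reviewing observations and removing suboptimal ones can be supported by a simple outlier rule. The Python sketch below assumes each observation has a per-observation error score (for example a reprojection error in pixels) and flags any whose error exceeds a multiple of the pool's median. The score, the `factor` default of 2.0 and the `prune` helper are all hypothetical; treat flagged observations as candidates for review rather than automatic deletions.

```python
from statistics import median


def prune(errors: dict[str, float], factor: float = 2.0) -> tuple[list[str], list[str]]:
    """Split observation names into (keep, flagged) by a hypothetical error score.

    An observation is flagged when its error exceeds `factor` times the
    median error of the pool. Both the score and the rule are illustrative
    assumptions, not Disguise's own criteria.
    """
    if not errors:
        return [], []
    limit = factor * median(errors.values())
    keep = [name for name, err in errors.items() if err <= limit]
    flagged = [name for name, err in errors.items() if err > limit]
    return keep, flagged
```

Using the median rather than the mean keeps a single very bad observation from raising the threshold and hiding itself.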

A Lens Pose is a result of the data captured during the observation process. Lens poses act as checkpoints that Disguise intelligently interpolates between. The number of lens poses you end up with depends on the range of your camera's lens.

- A new lens pose is created for every new combination of zoom and focus values. The most common zoom/focus combination will be the Primary lens pose, attributed to the Primary calibration.
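Lens poses can be sketched as follows: each distinct zoom and focus combination is one pose, the most common combination is the Primary lens pose, and values between poses are interpolated. The Python below uses linear interpolation along zoom of a hypothetical per-pose value such as field of view. This is an assumption for illustration, since the document only states that Disguise interpolates between lens poses; the `lens_poses` and `interpolate` helpers are hypothetical.

```python
from bisect import bisect_left
from collections import Counter


def lens_poses(samples: list[tuple[float, float]]) -> tuple[list[tuple[float, float]], tuple[float, float]]:
    """Return (distinct lens poses, primary pose) from (zoom, focus) samples.

    Each distinct (zoom, focus) combination is one lens pose; the most
    common combination is the Primary lens pose.
    """
    if not samples:
        raise ValueError("no observations")
    counts = Counter(samples)
    primary = counts.most_common(1)[0][0]
    return sorted(counts), primary


def interpolate(poses: dict[float, float], zoom: float) -> float:
    """Linearly interpolate a hypothetical per-pose value at a given zoom.

    Values outside the calibrated range are clamped to the nearest pose.
    """
    keys = sorted(poses)
    if zoom <= keys[0]:
        return poses[keys[0]]
    if zoom >= keys[-1]:
        return poses[keys[-1]]
    i = bisect_left(keys, zoom)  # first key >= zoom, so keys[i-1] < zoom
    z0, z1 = keys[i - 1], keys[i]
    t = (zoom - z0) / (z1 - z0)
    return poses[z0] + t * (poses[z1] - poses[z0])
```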
